Overview of modern volume rendering techniques for games – Part I

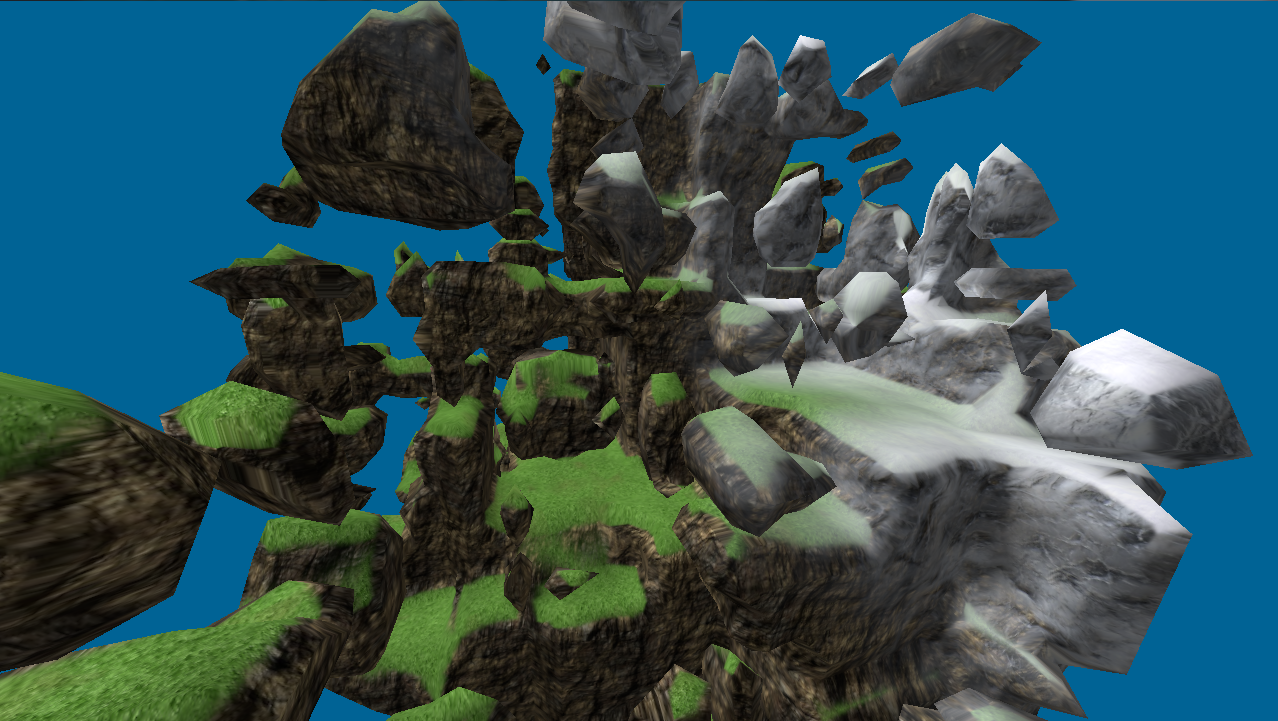

A couple of months ago Sony revealed their upcoming MMO title “EverQuest Next”. What made me really excited about it was their decision to base their world on a volume representation. This enables them to show amazing videos like this one. I’ve been very interested in volume rendering for a long time, and in this blog series I’d like to point out the techniques that are most suitable for games today and in the near future.

Over the course of the series I’ll explain the details of some of the algorithms as well as their practical implementations.

This first post introduces the concept of volume rendering and its greatest benefits for games.

Volume rendering is a well-known family of algorithms that project a set of 3D samples onto a 2D image. It is used extensively in a wide range of fields such as medical imaging (MRI, CT visualization), industry, biology, geophysics etc. Its usage in games, however, has been relatively modest, with some interesting use cases in titles like Delta Force, Outcast, C&C Tiberian Sun and others. The usage of volume rendering faded until recently, when we saw an increase in its popularity and a sort of “rediscovery”.

In games we are usually interested only in the surface of a mesh. Its internal composition is seldom of interest, in contrast to medical applications. As a result, relatively few games have chosen volume rendering over the usual polygon-based mesh representations. Volumes, however, have two characteristics that are becoming increasingly important for modern games: destructibility and procedural generation.

Games like Minecraft have shown that players are very much engaged by the possibility of creating their own worlds and shaping them the way they want. On the other hand, titles like Red Faction place an emphasis on the destruction of the surrounding environment. Both these games, although very different, have essentially the same technology requirement.

Destructibility (and of course constructability) is a property that game designers are actively seeking.

One way to make meshes modifiable is to operate directly on the traditional polygonal models. This has proved to be quite a complicated matter. Middleware solutions like NVIDIA APEX address polygon mesh destructibility, but they usually still require input from a designer, and the construction part remains largely unsolved.

Volume rendering can help a lot here. A 3D grid of volume elements (voxels) is a much more natural representation of an object than a collection of triangles. The volume already contains the important information about the shape of the object, and its modification is close to what happens in the real world: we either add or subtract volumes from one another. Many artists already work in a similar way in tools like ZBrush.

Voxels themselves can contain any data we like, but usually they define a distance field – that means that every voxel encodes a value indicating how far we are from the surface of the mesh. Material information is also embedded in the voxel. With such a definition, constructive solid geometry (CSG) operations on voxel grids become trivial. We can freely add or subtract any volume we’d like from our mesh. This brings a tremendous amount of flexibility to the modelling process.
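To make the CSG idea concrete, here is a minimal sketch in Python. It assumes a signed distance convention (negative inside the object, positive outside) and uses hypothetical helper names (`sphere_sdf`, `sample_grid`, `csg_union`, `csg_subtract`) that are mine, not taken from any particular engine. Under that convention a union is a per-voxel `min` and a subtraction is a per-voxel `max(a, -b)`. The result keeps the correct inside/outside sign, although it is no longer an exact distance everywhere. Material data is left out for brevity.

```python
import math

def sphere_sdf(center, radius):
    """Return a signed distance function for a sphere.
    Assumed convention: negative inside, zero on the surface, positive outside."""
    cx, cy, cz = center
    def sdf(x, y, z):
        return math.sqrt((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2) - radius
    return sdf

def sample_grid(sdf, size):
    """Sample an SDF at the integer coordinates of a size^3 grid, indexed [z][y][x]."""
    return [[[sdf(x, y, z) for x in range(size)]
             for y in range(size)]
            for z in range(size)]

def combine(a, b, op):
    """Apply a per-voxel operation to two grids of the same size."""
    return [[[op(va, vb) for va, vb in zip(row_a, row_b)]
             for row_a, row_b in zip(plane_a, plane_b)]
            for plane_a, plane_b in zip(a, b)]

def csg_union(a, b):
    # A point is inside the union if it is inside either volume.
    return combine(a, b, min)

def csg_subtract(a, b):
    # A point survives if it is inside a and outside b.
    return combine(a, b, lambda da, db: max(da, -db))

SIZE = 16
rock = sample_grid(sphere_sdf((8, 8, 8), 5.0), SIZE)
crater = sample_grid(sphere_sdf((8, 8, 12), 3.0), SIZE)

carved = csg_subtract(rock, crater)  # dig the crater out of the rock
merged = csg_union(rock, crater)     # or glue the two spheres together
```

Note that digging a hole never touches any topology: every voxel is updated independently, which is why this kind of edit is so much simpler than cutting a triangle mesh.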

Procedural generation is another important feature that has many advantages. First and foremost, it can save a lot of human effort and time. Level designers can generate a terrain procedurally and then just fine-tune it, instead of having to start from absolute zero and work out every tedious detail. This saving is especially relevant when very large environments have to be created, as in MMORPGs. With the new generation of consoles offering more memory and power, players will demand much more and better content. Only by generating content procedurally will the creators of virtual worlds be able to achieve the variety that future games need.

In short, procedural generation means that we create the mesh from a mathematical function that has relatively few input parameters. No sculpting by an artist is required, at least for the first raw version of the model.
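As a rough illustration, the sketch below generates a small voxel terrain from a function with only three parameters. The function `terrain_density`, its parameters and the sine-based height formula are all hypothetical choices made for this example; real generators typically layer several noise functions. The convention assumed here is that negative values mean solid ground and `y` points up.

```python
import math

def terrain_density(x, y, z, base_height=8.0, amplitude=3.0, frequency=0.4):
    """Hypothetical density function: negative below the ground, positive above.
    The whole landscape is described by just three numbers."""
    height = base_height + amplitude * math.sin(frequency * x) * math.cos(frequency * z)
    return y - height

def generate_voxels(size, **params):
    """Return the set of (x, y, z) coordinates that are solid in a size^3 grid."""
    return {
        (x, y, z)
        for x in range(size)
        for y in range(size)
        for z in range(size)
        if terrain_density(x, y, z, **params) < 0.0
    }

hills = generate_voxels(16)                 # rolling hills
plain = generate_voxels(16, amplitude=0.0)  # same function, flat terrain
```

Changing a single parameter produces a different landscape, which a level designer could then fine-tune by hand.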

Developers can also achieve high compression ratios and save a lot of download bandwidth and disk space by using procedural content generation. The surface is represented implicitly, with functions and coefficients, instead of heightmaps or 3D voxel grids (two popular methods for surface representation used in games). We already see huge savings from procedurally generated textures, so why shouldn’t the same apply to 3D meshes?

The use of volume rendering is not restricted to meshes. Today we see some other uses too. Some of them include:

– Global illumination (see the great work in Unreal Engine 4)

– Fluid simulation

– GPGPU ray-marching for visual effects

In the next posts in the series I’ll list and describe the modern volume rendering algorithms that I believe have the greatest potential to be used in current and near-future games.

Follow Stoyan on Twitter: @stoyannk